Modern cloud computing is not defined by compute alone. Although services like Amazon EC2 provide the processing power needed to run applications, storage and databases form the foundation for persistence, scalability, and data-driven intelligence. Without reliable storage systems and well-tuned databases, even the most sophisticated compute infrastructure would fail to deliver business value.

This post examines cloud storage and database solutions in depth, looks at their architecture, and illustrates how they integrate with major compute services such as EC2 to support enterprise workloads.

Understanding Cloud Storage Models

Cloud storage is designed to deliver durability, availability, and scalability beyond what traditional on-premises systems can offer. Storage services are typically categorized based on access patterns and performance requirements.

Object Storage

Object storage is optimized for scalability and durability rather than low-latency access. Services such as Amazon S3 store data as objects, making them ideal for backups, static website content, media assets, and data lakes. Object storage integrates tightly with EC2 by allowing compute instances to process massive datasets without maintaining local storage, enabling elastic and stateless application design.

Block Storage

Block storage provides low-latency, high-performance storage volumes that can be attached directly to compute instances such as EC2. Amazon EBS is commonly used for operating systems, transactional databases, and enterprise applications that require consistent performance. Block storage is essential for large EC2 deployments where predictable I/O performance directly impacts application responsiveness.
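When sizing a volume for predictable I/O, it helps to remember that throughput is roughly IOPS multiplied by the I/O size. The sketch below works through that arithmetic; the function names and the example figures (3,000 IOPS, 16 KiB requests, a 125 MiB/s target) are illustrative assumptions, not limits of any particular volume type.

```python
import math


def throughput_mib_per_s(iops: int, io_size_kib: int) -> float:
    """Approximate throughput in MiB/s for a given IOPS rate and request size."""
    return iops * io_size_kib / 1024


def iops_needed(target_mib_per_s: float, io_size_kib: int) -> int:
    """IOPS required to sustain a target throughput at a given request size."""
    return math.ceil(target_mib_per_s * 1024 / io_size_kib)


if __name__ == "__main__":
    # Illustrative numbers only; check the provider's documentation for real limits.
    print(throughput_mib_per_s(3000, 16))  # MiB/s delivered by 3,000 IOPS at 16 KiB
    print(iops_needed(125, 16))            # IOPS needed for 125 MiB/s at 16 KiB
```

A workload issuing small requests can exhaust its IOPS budget long before it reaches the volume's throughput ceiling, which is why both numbers matter when choosing a performance tier.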

File Storage

File storage systems offer shared access using standard file protocols, supporting workloads such as content management systems, home directories, and enterprise applications. Services like Amazon EFS allow multiple EC2 instances to access the same file system simultaneously, enabling horizontal scaling without complex storage management.

Selecting the appropriate storage type ensures that compute resources are not constrained by data access limitations, maintaining performance efficiency across distributed environments.
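The selection logic described in this section can be sketched as a small decision function. This is a simplified illustration: the `Workload` fields and the rule ordering are assumptions made for the example, and real decisions also weigh cost, durability targets, and throughput needs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of how an application accesses its data."""
    needs_low_latency_io: bool  # e.g. OS volumes, transactional databases
    shared_file_access: bool    # many instances mount the same file system


def choose_storage(workload: Workload) -> str:
    """Return 'file', 'block', or 'object' for a workload (simplified rules)."""
    if workload.shared_file_access:
        return "file"    # shared access over standard file protocols
    if workload.needs_low_latency_io:
        return "block"   # volume attached directly to one instance
    return "object"      # backups, media assets, data lakes


if __name__ == "__main__":
    database = Workload(needs_low_latency_io=True, shared_file_access=False)
    cms = Workload(needs_low_latency_io=False, shared_file_access=True)
    backups = Workload(needs_low_latency_io=False, shared_file_access=False)
    for name, w in [("database", database), ("cms", cms), ("backups", backups)]:
        print(name, "->", choose_storage(w))
```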

Cloud Database Models and Use Cases

Databases transform raw storage into structured, queryable systems that support application logic and analytics. Cloud databases are categorized based on data structure and workload characteristics.

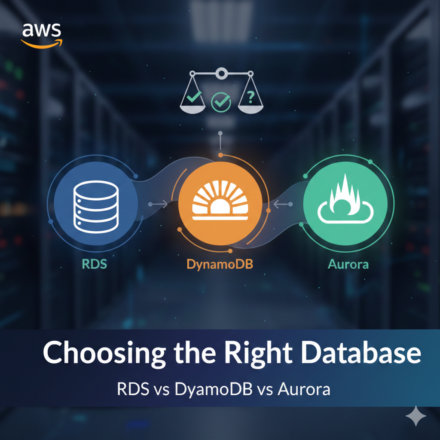

Relational Databases

Relational databases organize data into structured tables and enforce consistency through schemas and transactions. Managed services such as Amazon RDS and Aurora are widely used for enterprise applications, financial systems, and transactional workloads. These databases integrate with EC2 by handling data persistence while compute instances manage application logic, enabling clear separation of responsibilities.
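To make "consistency through schemas and transactions" concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a local stand-in for a managed relational service. The `accounts` table, the `CHECK` constraint, and the `transfer` helper are all assumptions made for illustration; against a managed service the application would use that engine's own driver and connection settings.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a schema that forbids negative balances."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move funds atomically: both updates are applied, or neither is."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; the credit was rolled back.
        return False


def balances(conn: sqlite3.Connection) -> dict:
    """Return {account_id: balance} for every account."""
    return dict(conn.execute("SELECT id, balance FROM accounts"))


if __name__ == "__main__":
    db = make_demo_db()
    print(transfer(db, 1, 2, 30), balances(db))
    print(transfer(db, 1, 2, 500), balances(db))
```

The second transfer fails on the debit, and the transaction rollback also undoes the credit that had already been applied, so the data never reaches an inconsistent state.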

NoSQL Databases

NoSQL databases prioritize scalability and flexibility over rigid schemas. Key-value, document, and wide-column databases support high-throughput workloads such as real-time analytics, IoT platforms, and user-session management. They are commonly paired with EC2-based microservices to support horizontally scalable architectures.

In-Memory Databases

In-memory databases provide extremely low latency by storing data directly in RAM. Services such as Amazon ElastiCache are critical for caching, session storage, and real-time data processing. These databases reduce load on EC2-hosted applications and backend databases, improving overall system performance.
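The way a cache reduces load on a backend database is often implemented with the cache-aside pattern: read from the cache first and fall back to the database only on a miss. The sketch below uses a plain in-process dictionary as a stand-in for an in-memory service; the `CacheAside` class, its `loader` callback, and the TTL value are hypothetical names and settings chosen for the example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class CacheAside:
    """Minimal cache-aside helper with a per-entry time-to-live (TTL)."""

    def __init__(
        self,
        loader: Callable[[str], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader  # called on a miss, e.g. a database query
        self._ttl = ttl_seconds
        self._clock = clock    # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the backend is not touched
        value = self._loader(key)  # miss or expired: go to the backend
        self._entries[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    def slow_lookup(key: str) -> str:
        print("backend query for", key)
        return key.upper()

    cache = CacheAside(slow_lookup, ttl_seconds=60)
    cache.get("user:1")  # triggers one backend query
    cache.get("user:1")  # served from the cache
```

A short TTL bounds how stale a cached value can become, at the cost of more frequent backend reads.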

Data Warehouses and Analytics Engines

Cloud-native data warehouses support large-scale analytics and reporting. These systems rely on powerful compute clusters and optimized storage layers to process massive datasets efficiently. EC2 instances often serve as data ingestion, transformation, or orchestration layers within these architectures.

The Relationship Between Compute, Storage, and Databases

Large-scale compute services such as EC2 do not operate in isolation. Performance, scalability, and cost efficiency are directly influenced by how storage and databases are designed and integrated.

- Compute instances rely on block and file storage for operating systems and application data.

- Databases depend on high-throughput storage and optimized networking to deliver consistent query performance.

- Object storage enables EC2 to process large datasets without scaling local disks, supporting data-intensive workloads.

- Auto Scaling compute environments require stateless architectures, which depend on externalized storage and managed databases.

In enterprise environments, this interdependence allows organizations to scale compute independently of data, reducing operational complexity and improving fault tolerance.

Optimization Strategies for Storage and Databases

Effective optimization matches data services to compute patterns according to workload characteristics.

Right-sizing storage performance tiers avoids paying for throughput that goes unused. Lifecycle policies automatically move rarely accessed data to less expensive storage tiers. Database read replicas and caching layers reduce the load on EC2 application servers, improving application responsiveness.
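As an illustration of a lifecycle policy, the sketch below builds a rule that moves objects to cheaper tiers as they age. The dictionary is intended to follow the shape that boto3's `put_bucket_lifecycle_configuration` accepts for Amazon S3, but it is only constructed here, not sent; the helper name, rule ID, prefix, and day thresholds are assumptions, so verify the structure against the current API reference before using it.

```python
import json


def build_lifecycle_config(prefix: str, ia_after_days: int, archive_after_days: int) -> dict:
    """Build a lifecycle configuration that tiers objects down as they age.

    Hypothetical helper: the thresholds and rule ID are illustrative choices.
    """
    if archive_after_days <= ia_after_days:
        raise ValueError("archive_after_days must be greater than ia_after_days")
    return {
        "Rules": [
            {
                "ID": "tier-down-" + (prefix.strip("/") or "all"),
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Infrequent-access tier first, archive tier later.
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    config = build_lifecycle_config("logs/", ia_after_days=30, archive_after_days=90)
    print(json.dumps(config, indent=2))
```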

For large EC2 deployments, placing databases and storage resources in the same Availability Zones as the EC2 instances lowers latency and data transfer costs. Continuous monitoring with logs and metrics helps identify performance issues early.

Managing Costs and Operational Complexity

Hidden costs include over-provisioned databases, accumulated snapshots, unused storage capacity, and data transfer charges. Regular cost reviews, consistent resource tagging, and automated cleanup rules help keep spending under control.
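A cost review of this kind can be partly automated. The sketch below scans a hypothetical resource inventory for missing tags, unattached volumes, and old snapshots; the `Resource` record, the required tag names, and the 90-day threshold are assumptions for illustration, and a real review would pull the inventory from the provider's APIs or billing exports.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List

REQUIRED_TAGS = ("owner", "cost-center")  # assumed tagging policy


@dataclass
class Resource:
    """Hypothetical inventory record for a storage resource."""
    resource_id: str
    kind: str            # "volume" or "snapshot"
    attached: bool       # only meaningful for volumes
    age_days: int
    tags: Dict[str, str] = field(default_factory=dict)


def review(resources: Iterable[Resource], max_snapshot_age_days: int = 90) -> Dict[str, List[str]]:
    """Return {resource_id: [issues]} for resources that need attention."""
    findings: Dict[str, List[str]] = {}
    for r in resources:
        issues: List[str] = []
        missing = [t for t in REQUIRED_TAGS if t not in r.tags]
        if missing:
            issues.append("missing tags: " + ", ".join(missing))
        if r.kind == "volume" and not r.attached:
            issues.append("unattached volume")
        if r.kind == "snapshot" and r.age_days > max_snapshot_age_days:
            issues.append("snapshot older than %d days" % max_snapshot_age_days)
        if issues:
            findings[r.resource_id] = issues
    return findings


if __name__ == "__main__":
    inventory = [
        Resource("vol-a", "volume", True, 10, {"owner": "web", "cost-center": "42"}),
        Resource("vol-b", "volume", False, 200, {"owner": "web"}),
        Resource("snap-c", "snapshot", False, 400, {"owner": "db", "cost-center": "7"}),
    ]
    for resource_id, issues in review(inventory).items():
        print(resource_id, issues)
```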

Managed storage and database services cut operational overhead by taking over tasks such as patching, backups, and replication management. This frees engineering teams to focus on improving EC2-based applications rather than managing infrastructure.

Best Practices for Enterprise Storage & Database Design

- Match storage and database types to application access patterns

- Decouple compute from data to enable elastic scaling

- Use managed database services to improve reliability and reduce maintenance

- Implement caching to optimize performance and reduce compute load

- Continuously monitor usage, performance, and costs

Conclusion

Databases and cloud storage are essential to modern computing systems. While Amazon EC2 and similar services provide the ability to run applications, databases and storage ensure scalability, performance, and durability across a range of data volumes. Designing these components together rather than in isolation enables businesses to build more capable, cost-effective, and high-performing cloud solutions.

By matching storage models, database engines, and compute resources to actual workload requirements, companies can get the most out of their cloud infrastructure and build solutions that scale gracefully with business growth.
